Adding persistence to on premises Kubernetes cluster

The following is part of a series of posts called "Repurposing old equipment by building a Kubernetes cluster".

Old equipment by itself is generally not very useful unless you find a particular use case for it. By combining a number of old devices, though, you can build a more powerful system that can perhaps span several use cases, and Kubernetes is a perfect candidate for this. I had a number of old laptops lying about and decided to test this theory out.

- Creating a bare-bones on premises Kubernetes cluster from old hardware - 30 August 2019

- Adding persistence to on premises Kubernetes cluster - 1 September 2019

- Adding Helm to on premises Kubernetes cluster - 2 September 2019

- Adding an Ingress to on premises Kubernetes cluster without load balancer - 2 September 2019

- Adding Jenkins to on premises Kubernetes cluster via Helm - 4 September 2019

- Adding Prometheus to on premises Kubernetes cluster via Helm - 4 September 2019

One of the more difficult things with Kubernetes is correctly setting up persistence for stateful services. The abstractions provided by storage classes, volume claims, etc. help a lot, but in most cases you’re going to need to do some vendor-specific setup. For example, running on GKE means you’ll likely be using a gcePersistentDisk in your PersistentVolume.

On premises, we could use something like Rook, but I’m looking to keep things simple this time round, so I feel the best choice is an NFS storage solution.

Before starting, I want the NFS storage class to be the default storage class for any future requirements. To do this, I need to enable the DefaultStorageClass admission plugin. This is done by editing the /etc/kubernetes/manifests/kube-apiserver.yaml file on the master node and adding DefaultStorageClass to the comma-separated values on the --enable-admission-plugins line.
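For reference, the edited part of the manifest might look roughly like the fragment below. This is a sketch assuming a kubeadm-built master, where the flag typically starts out as `--enable-admission-plugins=NodeRestriction`; every other field and flag in the real file is omitted here and will differ from cluster to cluster.

```yaml
# /etc/kubernetes/manifests/kube-apiserver.yaml (fragment only; other fields and flags omitted)
# Assumes a kubeadm-generated static pod manifest whose flag originally listed only NodeRestriction.
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        # DefaultStorageClass appended to the existing comma-separated list
        - --enable-admission-plugins=NodeRestriction,DefaultStorageClass
```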

The kubelet supposedly watches the manifests in this directory for changes, which I feel is not a very declarative or normal approach for Kubernetes. I’m not really sure how to check the changes, although when I look at the kube-apiserver pod, it appears to have been running for only a few minutes, which suggests the update took place pretty much immediately after I saved the configuration file.

Great!

Once this is done, the storageclass.kubernetes.io/is-default-class annotation on the StorageClass we’re about to create should mean that any PersistentVolumeClaim that doesn’t set a storageClassName will automatically get an NFS volume provisioned.

One last thing prior to adding the storage configuration to the cluster: each node needs nfs-common installed at the OS level in order to mount NFS volumes, so at this point I run the following on all machines in the cluster.

```shell
sudo apt-get install -y nfs-common
```

Alright, time to apply the storage configuration to the cluster.

```yaml
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
---
kind: Service
apiVersion: v1
metadata:
  name: nfs-provisioner
  labels:
    app: nfs-provisioner
spec:
  ports:
    - name: nfs
      port: 2049
    - name: mountd
      port: 20048
    - name: rpcbind
      port: 111
    - name: rpcbind-udp
      port: 111
      protocol: UDP
  selector:
    app: nfs-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccountName: nfs-provisioner
      containers:
        - name: nfs-provisioner
          image: quay.io/kubernetes_incubator/nfs-provisioner:latest
          ports:
            - name: nfs
              containerPort: 2049
            - name: mountd
              containerPort: 20048
            - name: rpcbind
              containerPort: 111
            - name: rpcbind-udp
              containerPort: 111
              protocol: UDP
          securityContext:
            capabilities:
              add:
                - DAC_READ_SEARCH
                - SYS_RESOURCE
          args:
            - "-provisioner=home.com/nfs"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: SERVICE_NAME
              value: nfs-provisioner
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: export-volume
              mountPath: /export
      volumes:
        - name: export-volume
          hostPath:
            path: /mnt/ssd/nfs
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/hostname
                    operator: In
                    values:
                      - acepc
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-sc
  annotations:
    # Annotation values must be strings, so "true" is quoted
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: home.com/nfs
mountOptions:
  - vers=4.1
```

While it’s quite a large and involved configuration, if you read through it there isn’t anything too complex or special in what it’s doing. Most of the configuration is related to setting up RBAC roles and bindings. The main points are:

- The provisioner name that is passed in can be anything, as long as the provisioner field on the StorageClass matches it. I’ve decided to just use home.com in this instance because I couldn’t think up anything super intelligent when I put together the configuration.

- We’re passing in a bunch of information about the pod directly as environment variables, as the nfs-provisioner container requires them.

- We’re setting up node affinity with the node whose hostname is acepc.

The reason for setting up affinity with acepc for this pod is that acepc has a 250GB SSD installed.

For the moment I’m only using this drive for persistence, but in future I could add further drives attached to different nodes and set up other deployments to target the nodes those drives are attached to.
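One way to make that future setup easier might be to target a node label describing the disk rather than a hostname. The fragment below is a sketch under that assumption: the `disk: ssd` label is hypothetical and would first need to be applied to the node (for example with `kubectl label nodes acepc disk=ssd`). For a single exact-match label, a plain `nodeSelector` like this has the same effect as the `requiredDuringSchedulingIgnoredDuringExecution` affinity in the Deployment above.

```yaml
# Fragment of the nfs-provisioner Deployment (only the pod template's spec is shown).
# Hypothetical label: apply it first with `kubectl label nodes acepc disk=ssd`.
spec:
  template:
    spec:
      nodeSelector:
        disk: ssd
```

One caveat with this design: if more than one node carried the same label, the pod could be scheduled onto either of them and would then see a different hostPath directory, so each drive would probably want its own label value.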

At this point I check that everything deployed OK, and there appears to be an error: the path /mnt/ssd/nfs doesn’t appear to exist. I quickly ssh into acepc directly, create the missing nfs directory, and delete the current pod so that the Deployment’s ReplicaSet re-creates it. After a few seconds, everything is up and running.
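An alternative to creating the directory by hand might be to let the kubelet create it. `hostPath` volumes accept a `type` field, and with `DirectoryOrCreate` an empty directory with 0755 permissions is created if nothing exists at the path. A sketch of the changed `volumes` entry for the Deployment above:

```yaml
# Replacement for the volumes entry in the nfs-provisioner Deployment's pod spec.
volumes:
  - name: export-volume
    hostPath:
      path: /mnt/ssd/nfs
      # Create the directory on the node if it does not already exist
      type: DirectoryOrCreate
```

The trade-off is that if the SSD were ever not mounted at /mnt/ssd, the directory could silently end up on the root filesystem instead, whereas the manual approach fails loudly.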

All looking good. Now to test it out. I choose to make a MySQL deployment to see what happens.

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nfs-mysql-test-pvc

spec:

storageClassName: nfs-sc

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1Gi

---

apiVersion: v1

kind: Service

metadata:

name: mysql

spec:

ports:

- port: 3306

targetPort: 3306

protocol: TCP

selector:

app: mysql

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mysql

spec:

selector:

matchLabels:

app: mysql

strategy:

type: Recreate

template:

metadata:

labels:

app: mysql

spec:

containers:

- image: mysql:5.6

name: mysql

env:

- name: MYSQL_ROOT_PASSWORD

value: password

ports:

- containerPort: 3306

name: mysql

volumeMounts:

- name: mysql-storage

mountPath: /var/lib/mysql

volumes:

- name: mysql-storage

persistentVolumeClaim:

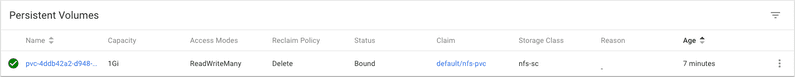

claimName: nfs-mysql-test-pvcI apply the above configuration and after a few minutes take a look at the persistent volumes, in Dashboard. Wowsers, there is a dynamically created volume now created for the MySQL deployment that I just launched.

Awesome, this means that anything requiring persistence can now be dynamically provisioned based on its requirements. It’s worth mentioning at this point that the PersistentVolumeClaim sets an explicit storageClassName, so I haven’t yet tested whether the default storage class functionality works.
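A claim along the lines of the sketch below could be used to exercise the default class: it leaves `storageClassName` out entirely, so the DefaultStorageClass admission plugin should fill in `nfs-sc`. The claim name is hypothetical. Note that explicitly setting `storageClassName: ""` is not the same thing; an empty string asks for a volume with no class and bypasses the default.

```yaml
# Hypothetical claim for testing the default StorageClass; storageClassName is deliberately omitted.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-default-class-test-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

If the default is working, `kubectl get pvc nfs-default-class-test-pvc` should list `nfs-sc` in its STORAGECLASS column.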

Time to test out MySQL in this context. I forward the MySQL instance’s port to my local machine and connect to it via MySQL Workbench.

```shell
$ kubectl get pods
NAME                                                     READY   STATUS    RESTARTS   AGE
mysql-64d7f649b-mpnjx                                    1/1     Running   0          109s
nfs-provisioner-78495f4f78-4qgcg                         1/1     Running   0          33m
weave-scope-agent-weave-scope-test-68mwl                 1/1     Running   0          20h
weave-scope-agent-weave-scope-test-f5m8h                 1/1     Running   0          20h
weave-scope-agent-weave-scope-test-mrjt8                 1/1     Running   0          20h
weave-scope-agent-weave-scope-test-mvptk                 1/1     Running   0          20h
weave-scope-agent-weave-scope-test-x9rgp                 1/1     Running   0          20h
weave-scope-agent-weave-scope-test-xzv6l                 1/1     Running   0          20h
weave-scope-frontend-weave-scope-test-78d78cf9fd-5ql92   1/1     Running   0          20h
$ kubectl port-forward mysql-64d7f649b-mpnjx 3306:3306
Forwarding from 127.0.0.1:3306 -> 3306
Forwarding from [::1]:3306 -> 3306
Handling connection for 3306
```

That takes care of the forwarding. Opening up Workbench, I start a new session against localhost:3306, enter the password we set earlier in the deployment, and it connects.

I create a new schema and table in Workbench and populate the table with some test data. All works smoothly. As this is my first time setting up NFS, I want to dig a little deeper. Let’s check out the actual location of the volume. I ssh into acepc and go to the /mnt/ssd/nfs directory.

```shell
root@acepc:/mnt/ssd/nfs$ ls -la
total 32
drwxr-xr-x 3 root root 4096 Sep 3 16:14 .
drwxr-xr-x 4 root root 4096 Sep 3 08:09 ..
-rw-r--r-- 1 root root 9898 Sep 3 16:15 ganesha.log
-rw------- 1 root root 36 Sep 3 14:50 nfs-provisioner.identity
drwxrwsrwx 5 999 docker 4096 Sep 3 16:16 pvc-0c9221bc-1589-45be-8350-0403378bdceb
-rw------- 1 root root 902 Sep 3 16:14 vfs.conf
root@acepc:/mnt/ssd/nfs$ cd pvc-0c9221bc-1589-45be-8350-0403378bdceb/
root@acepc:/mnt/ssd/nfs/pvc-0c9221bc-1589-45be-8350-0403378bdceb$ ls -la
total 176152
drwxrwsrwx 5 999 docker 4096 Sep 3 16:16 .
drwxr-xr-x 3 root root 4096 Sep 3 16:14 ..
-rw-rw---- 1 999 docker 56 Sep 3 16:15 auto.cnf
-rw-rw---- 1 999 docker 79691776 Sep 3 16:19 ibdata1
-rw-rw---- 1 999 docker 50331648 Sep 3 16:19 ib_logfile0
-rw-rw---- 1 999 docker 50331648 Sep 3 16:15 ib_logfile1
drwx--S--- 2 999 docker 4096 Sep 3 16:15 mysql
drwx--S--- 2 999 docker 4096 Sep 3 16:18 new_schema
drwx--S--- 2 999 docker 4096 Sep 3 16:15 performance_schema
```

That looks how I’d expect. We can see the directory for the volume claim there, and internally it is most definitely a MySQL database.

Let’s see if the data is available correctly across pods. I find the current MySQL pod, which is running on lenovo, and delete it; the ReplicaSet re-creates the pod, this time on dell.

I port-forward the new pod, reconnect MySQL Workbench, and it appears that the schema is intact. That means NFS is persisting the data independently of the pod, and the volume can be mounted from whichever machine the pod lands on.

For interest, what happens if I restart the nfs-provisioner? This will be a good test to see how robust things are in a pretty significant failure.

```shell
$ kubectl get pods
NAME                                                     READY   STATUS    RESTARTS   AGE
mysql-64d7f649b-hpv8j                                    1/1     Running   0          14m
nfs-provisioner-78495f4f78-4qgcg                         1/1     Running   0          59m
weave-scope-agent-weave-scope-test-68mwl                 1/1     Running   0          21h
weave-scope-agent-weave-scope-test-f5m8h                 1/1     Running   0          21h
weave-scope-agent-weave-scope-test-mrjt8                 1/1     Running   0          21h
weave-scope-agent-weave-scope-test-mvptk                 1/1     Running   0          21h
weave-scope-agent-weave-scope-test-x9rgp                 1/1     Running   0          21h
weave-scope-agent-weave-scope-test-xzv6l                 1/1     Running   0          21h
weave-scope-frontend-weave-scope-test-78d78cf9fd-5ql92   1/1     Running   0          21h
$ kubectl delete pod/nfs-provisioner-78495f4f78-4qgcg
pod "nfs-provisioner-78495f4f78-4qgcg" deleted
```

Again, I redo the port-forward, open MySQL Workbench, and everything is still there. Nice! At this point I’m confident that dynamic NFS provisioning is working well.

I found the following links really helpful when setting up NFS:

- https://github.com/kubernetes/examples/blob/master/staging/volumes/nfs/nfs-server-rc.yaml

- https://github.com/carlosedp/kubernetes-arm/blob/master/3-NFS_Storage/3-deployment-arm.yaml

- https://kubernetes.io/blog/2018/04/13/local-persistent-volumes-beta/

- https://medium.com/@carlosedp/building-a-hybrid-x86-64-and-arm-kubernetes-cluster-e7f94ff6e51d

— edit —

I didn’t test the default storage class provisioning in this post, but see the post on adding Jenkins in this series: I tested it there and it works.